The Great AI Speed Mismatch

The real race in AI isn’t just about algorithms — it’s about megawatts and metal.

While the latest AI models can be deployed in a matter of weeks, it can take up to two years for a data center to become fully operational, assuming no delays. This mismatch in deployment time between physical infrastructure and software intelligence has created a significant bottleneck for the rapid growth of AI.

Given AI's hunger for power and GPUs, legacy data centers have failed to meet the demands of new models. Even when models like DeepSeek’s R1 show promising results using less compute power on older GPUs, the overarching trend remains: models will still require immense power to improve in the coming years (more on Epoch AI).

AI infrastructure can be broken down into three primary construction project types:

The data center itself, which houses the semiconductors and supporting IT equipment required to perform AI operations.

Electricity generation facilities (i.e., power plants), which produce the electricity delivered to the data center.

Transmission infrastructure (e.g., power lines), used to transmit electricity from its source (more on Anthropic).

In recent Deep Dives at Nido we’ve talked about how the immense energy appetite of AI is a well-documented challenge, with demand projected to soar in the coming years (more in ConteNIDO). However, even if the US meets this power demand, two other key construction project components are necessary to complete the AI ecosystem.

What differentiates a regular data center from a hyperscale data center is that a hyperscale facility is built and operated by a company for its own large-scale use, with at least 100 megawatts (MW) of power capacity and tens of thousands of identical servers. Legacy data centers, by contrast, could fit into the basement of a building. Hyperscale data centers are primarily designed for the intensive demands of AI training, rather than AI inference. In short, AI training is the first step, in which a model learns new capabilities from existing data, and AI inference is when a trained model applies those capabilities to new data (more on NVIDIA, Cloudflare).
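For readers who think in code, the difference between training and inference can be shown with a toy sketch. This is only an illustration under simplifying assumptions: the "model" is a single slope fitted by least squares, the numbers are made up, and the function names `train` and `infer` are hypothetical rather than taken from any real AI framework.

```python
# Toy illustration: "training" fits a parameter, "inference" applies it.
# The one-parameter model y = w * x and all data here are made up.

def train(xs, ys):
    """Training: learn the slope w of y = w * x from existing data (least squares)."""
    numerator = sum(x * y for x, y in zip(xs, ys))
    denominator = sum(x * x for x in xs)
    return numerator / denominator


def infer(w, x_new):
    """Inference: apply the already-learned slope to data the model has not seen."""
    return w * x_new


# Existing data that follows y = 2x exactly.
weight = train([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])

# New data point, never used during training.
prediction = infer(weight, 10.0)
print(weight, prediction)
```

Training loops over the whole dataset to find the parameter, while inference is a single cheap application of it. Real models repeat that training work across billions of parameters, which is why training is the more demanding workload for a facility.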

Why Does It Take So Long to Build for AI?

The first step in building a data center is securing various pre-construction permits at the federal, state, and local levels, covering both land use and environmental regulations. Securing some of these can take up to a decade, and all permits must be cleared before construction can begin. To have a data center operational in 2028, for instance, all necessary permits would need to be ready by 2026. The current administration has made efforts to accelerate the construction of hyperscale data centers. However, supply chain bottlenecks stretch project timelines, and it is uncertain whether its executive order will remain in effect when the next president takes office. By that time, the data centers being developed today will be in the final steps of being connected. As Jamie Dimon said in an interview with the Acquired podcast, "The government you treat with today is not the one you will be treating with tomorrow."

Another major issue is the difficulty of building a supply chain for the specialized hardware required to run hyperscale data centers. The supply chain is stressed by the sudden surge in demand for necessary components, and there is a notable shortage of generators and switchgear (more on McKinsey). The situation is so strained that Crusoe, the company building OpenAI’s Stargate project, has opted to manufacture switchgear in-house rather than wait for the supply chain to recover. Industrial-grade generators have also become scarce, as they are used across many industries, including healthcare, manufacturing, mining, and oil and gas.

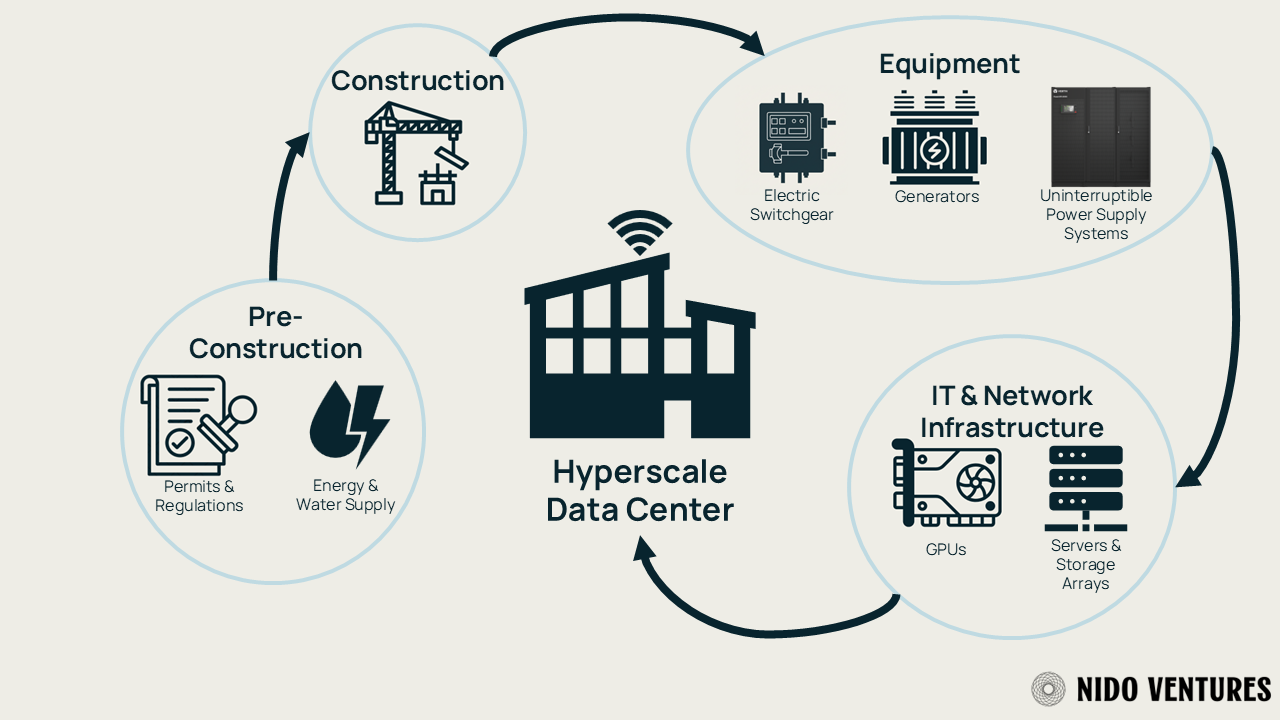

Figure 1. Supply chain of a hyperscale data center

The power grid itself presents a distribution problem. Much of the US power grid was developed in the 1970s and had not faced significant pressure to upgrade until recent AI demands. One workaround is to build facilities near natural gas fields to lower distribution costs. However, this requires gas turbines to power the buildings, and most of the manufacturers that build them worldwide, such as GE and Siemens, have waiting lists of two to four years (more on Bloomberg Live).

Cooling these massive sites is a significant environmental concern, as keeping chips cool has traditionally required vast quantities of water. Liquid cooling has emerged as a solution, but because the technology is still new, it is difficult to source materials for it and to produce it at the scale demanded.

From a human resources perspective, sourcing the necessary skilled labor pool to build and operate these sites is another bottleneck. There are few places in the world where a skilled labor pool and an adequate energy supply are available simultaneously.

Furthermore, concerns have been raised by communities with established data centers. These concerns relate to promises of job creation, as many positions are ultimately filled by non-local workers, and the negative impact on local power grids, which has led to service inconsistencies (more on Data Center Watch). Established hubs like Northern Virginia have faced local backlash in recent months over these issues.

Tackling the Infrastructure Gap

In response to these challenges, companies are finding more agile ways to build the data centers needed for AI. The investment driving this shift is staggering, as big tech and private equity firms open their wallets to meet the surging demand. On a macroeconomic scale, this AI capex boom is now adding almost 1% to US GDP growth (more on Sherwood).

One solution is to retrofit existing industrial buildings to meet the requirements of a data center, rather than building from the ground up. This approach was used for xAI's new site in Memphis, Tennessee, where an existing building was converted into a functional AI training data center in just 122 days (xAI). Notably, the site was chosen in March, and the permits were fast-tracked at state and local levels. To meet its energy needs without straining the local grid, the project partnered with Solaris, a Houston-based company offering Power-as-a-Service (more on Data Center Dynamics). This is a prime example of government and private industry collaborating to meet AI's computational demands.

The more traditional method of building these massive sites from scratch has also seen innovation. OpenAI’s Stargate project in Abilene, Texas, is being built by Crusoe, a startup that pivoted to data center infrastructure (more on Forbes). The company focuses on building near natural gas supplies to provide high-performance computing at a low cost, adapting modular data center designs. This approach has allowed it to construct the site and build the internal server infrastructure simultaneously.

Beyond the buildings, innovations are also happening with the main actors within them: the chips. These components generate constant heat, and traditional air cooling is no longer efficient. To combat this, two main solutions have emerged: direct-to-chip liquid cooling and full-immersion cooling (more on Vertiv).

On July 21st, Anthropic published a report titled “Build AI in America” with recommendations for creating a better environment for AI development. Two days later, the White House released its “America’s AI Action Plan”, along with three executive orders, one of which addresses specific permits for data centers. These actions come at a critical time, and both address the need for fast-tracked permits and incentives to develop the infrastructure required to keep pace with AI's needs. That executive order specifically mentions facilities requiring over 100 megawatts (MW) of new load dedicated to AI inference, training, simulation, or synthetic data generation.

The New Data Center Frontier

The ideal location is crucial for ensuring 24/7 operation, and West Texas has become a favored frontier. This area is booming thanks to a combination of factors: abundant natural gas fields and wind farms, local government incentives, and available land. As many tech companies have already moved offices to Texas, it is a logical step to build the necessary computing power near their new corporate homes.

A more forward-looking frontier, one that could be plausible in a few years' time, is data centers in space, a concept the Redmond, Washington-based startup Starcloud plans to pursue in partnership with NVIDIA. As the cost of launching payloads into space falls each year, sending solar-powered data centers to orbit could be closer than you think (more on The Economist).

This analysis raises a question: why isn't Mexico a hub for these hyperscale data centers? Land is cheaper, there is proximity to capable labor pools, and infrastructure could be built to custom specifications. However, energy supply remains a primary obstacle, and there are security concerns associated with building powerful AI projects outside of the US.

Building for the Future

The potential of AI has not only created opportunities for technological development; it has also pushed physical constraints to their limits, forcing industries to react to its immense demands. This AI-driven innovation will be a much-needed catalyst for creating new alternatives in construction, energy, and manufacturing. We are already seeing advances in upgrading the existing power grid, and nuclear fission is becoming a more viable energy alternative (more on ConteNIDO). The construction of hyperscale data centers has spurred rapid solutions like prefabricated modules and in-house production of scarce equipment.

The race for AI supremacy may be fought on the technical side, but it will be won on the physical side. AI development is no longer confined to Silicon Valley. It is expanding to new frontiers where technology has not traditionally been a primary focus. These growing projects will stimulate a diverse array of sectors, including construction, robotics, energy, and engineering, and will require skilled electricians, carpenters, and more.

This urgency has accelerated progress on issues that might have otherwise developed slowly. As always, competition proves to be the best motivator for innovation. The next unicorns might not be software companies, but rather the industrial tech innovators who successfully close the AI infrastructure gap.
