🧠 LLM Anatomy 101: How Large Language Models "Reason"

The colossal size of modern Large Language Models (LLMs) has sparked a mix of awe and unease in even the most seasoned experts. In many ways, the inner workings of these models have become a mystery, hidden behind a tangle of mathematics that is nearly impossible to follow by hand. While the implementation of “weights” (explained later) is fully transparent, the micro-interactions that occur within the models are far harder to follow and interpret. Picture an LLM as a creature with a vast number of distinct cells, and imagine trying to keep track of every single cell interaction within that system, down to the molecular level. Just as we cannot track every molecular interaction that produces a heartbeat, it is a daunting task to explain exactly why LLMs behave the way they do. This opacity has led to some peculiar outputs, such as the AI-designed chips described in this paper by Princeton University, where unconventional designs with strange, pixelated, asymmetric geometries performed as well as or better than existing chips designed by experts. The message is eerie but clear: the inner workings may be opaque, but the results are unmistakably effective.

It is still worthwhile to devote time to understanding these models rather than settling for satisfaction with their performance. If we wish to eventually place LLMs in high-stakes environments where unpredictability is a critical danger, a solid intuition for how they operate is indispensable.

At Nido, we see every day how these models fuel innovation, from startups using AI to turbocharge drug discovery to teams automating customer support with near-human fluency. Understanding how LLMs arrive at their answers isn’t just academic; it helps us spot opportunities, assess technical risk, and back founders building the next generation of AI products. By peering under the hood, we gain the confidence to fund breakthroughs that are not only powerful but also predictable and aligned with real-world needs.

Through a series of papers, Anthropic narrates its findings after developing various methods of tracing the circuitry and logic inside its Claude models. Researchers at Anthropic built a microscope aimed at Claude’s circuitry and zoomed in on the myriad tiny interactions that yield its outputs. In the sections ahead, we’ll walk through their findings in plain language, following the clues toward whatever kind of “thinking” lives inside the code.

Reverse Engineering the LLM Brain

In their paper Circuit Tracing: Revealing Computational Graphs in Language Models, the researchers at Anthropic seek to first create a feasible method of interpreting the individual “neurons” of a neural network. But first, let’s define what a “neuron” even is.

Picture a wall covered with switches. Each switch checks the incoming signal and decides whether to flip on or stay off. In this analogy, every switch is a single neuron: it activates only when the input matches the pattern it has learned during training. (note: modern neural networks are not entirely binary, but rather they have a spectrum of “on-ness” and “off-ness”, more like a light dimmer than a switch).

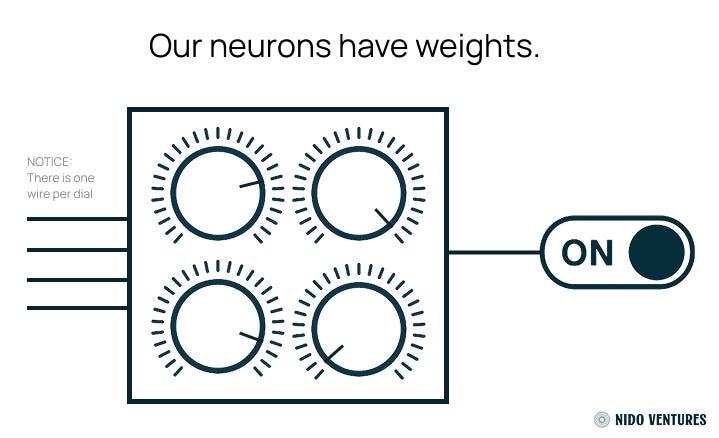

But what actually drives those switches? It’s the weights, or parameters: simple numbers that scale each input signal before it reaches a switch. Every switch sees not just one signal but a whole set: each input is multiplied by its own weight (adjusted by its own dial in our metaphor), and then all those products get summed into a single value. When that total reaches or passes the switch’s threshold, the switch flips on. You can picture the weights as a control panel full of tiny knobs. During training, the model tweaks each knob little by little until the right combination of scaled inputs makes the exact switches light up to give us the output we want.
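To make the dials-and-switch picture concrete, here is a minimal sketch of a single neuron. The function name, the example numbers, and the choice of a sigmoid as the "dimmer" are illustrative assumptions, not details taken from any particular model.

```python
import math

def neuron(inputs, weights, bias=0.0):
    """Scale each input by its own weight, sum the results, then squash.

    The sigmoid is an assumed stand-in for the 'dimmer': it turns the
    weighted sum into a level between 0 (off) and 1 (fully on).
    """
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))

signals = [1.0, 0.5, -1.0]   # incoming signals (made-up values)
dials = [0.8, -0.2, 0.4]     # one weight per input (made-up values)
activation = neuron(signals, dials)
print(round(activation, 3))
```

Training amounts to nudging the numbers in `dials` (and `bias`, which plays the role of the threshold) until neurons like this one respond to the right patterns.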

Now imagine a vast field of dials, each one adjusted on its own whenever the model checks whether its last guess matched the target. Some unconfirmed estimates put the largest modern LLMs at around 1.8 trillion of these knobs. But what signals flow through them depends on the layer you’re in. In the very first layer, each dial receives raw input values. For an image model, that might be the numeric values of a pixel’s color. In a language model, it’s a coordinate vector from a “token embedding”. In extremely simplified terms, a token embedding is a list of numbers that gives every word or piece of text (a “token”) its own identity. Behind the scenes, the model holds a huge table, an embedding matrix, that maps each token to its vector (list of numbers). Training places related tokens, such as “dad” and “mom”, close together, so the table itself encodes relationships between words. Sensitivity to the surrounding context comes later, from the layers stacked on top.
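A toy version of the embedding table can be sketched in a few lines. The three-number vectors below are hand-picked for illustration (real embeddings are learned and have hundreds or thousands of dimensions), and cosine similarity is one common way, assumed here, to measure how close two vectors are.

```python
import math

# Hypothetical embedding table: token -> vector (values invented for illustration).
EMBEDDINGS = {
    "mom": [0.9, 0.8, 0.1],
    "dad": [0.8, 0.9, 0.1],
    "car": [0.1, 0.0, 0.9],
}

def embed(token):
    """Look up the vector for a token, like reading one row of the table."""
    return EMBEDDINGS[token]

def cosine_similarity(a, b):
    """Return a value near 1.0 when two vectors point in nearly the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

family = cosine_similarity(embed("mom"), embed("dad"))
unrelated = cosine_similarity(embed("mom"), embed("car"))
print(family > unrelated)
```

In this made-up table, “mom” and “dad” score far higher with each other than either does with “car”, which is the kind of grouping the embedding matrix learns on its own during training.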

We take each number from the embedded token’s list and send it along its own input wire into the first layer. There, a set of dials scales those inputs and sums them into a single signal for a switch; that switch is our neuron. Every neuron then sends its output along wires into the next layer of dials, which in turn feed their own switches. In this way, every neuron in one layer connects to every neuron in the next layer through its own set of dials.
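The layer-to-layer wiring described above can be sketched as follows. The weights are arbitrary example values, and ReLU (which simply clips negative sums to zero) is an assumed choice of switch.

```python
def relu(x):
    """A simple switch: pass positive signals through, block negative ones."""
    return max(0.0, x)

def dense_layer(inputs, weight_rows, biases):
    """One row of weights per neuron; every neuron sees every input."""
    return [
        relu(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weight_rows, biases)
    ]

embedding = [0.5, -1.0, 2.0]            # made-up token embedding
hidden = dense_layer(                   # first layer: 3 inputs -> 2 neurons
    embedding,
    [[1.0, 0.0, 0.5], [0.0, 1.0, 0.0]],
    [0.0, 0.0],
)
output = dense_layer(hidden, [[2.0, 1.0]], [0.1])  # second layer: 2 -> 1
print(hidden, output)
```

Each call to `dense_layer` is one "wall of switches"; feeding `hidden` into the second call is what it means for one layer's outputs to become the next layer's inputs.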

On top of those layers you have additional blocks in an LLM:

A Self-Attention block that dynamically adjusts which inputs it focuses on.

A LayerNorm block that keeps values from jumping too high or too low.

A Multilayer Perceptron (MLP) that finds deeper patterns the attention mechanism might miss.

You can read more about attention in Google’s famous paper on the transformer architecture, Attention Is All You Need. After data passes through enough of these layers, the model produces its final output: a probability for every token that could come next.
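For readers who want to see the attention and LayerNorm blocks in miniature, here is a heavily simplified sketch. It assumes a single attention head and skips the learned query, key, and value projections that real transformers use, so it only shows the core idea: each token's new vector is a weighted blend of all token vectors, with weights based on similarity.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Simplified scaled dot-product attention (no learned projections)."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ])
    return outputs

def layer_norm(vector, eps=1e-5):
    """Rescale a vector to zero mean and roughly unit variance."""
    mean = sum(vector) / len(vector)
    variance = sum((x - mean) ** 2 for x in vector) / len(vector)
    return [(x - mean) / math.sqrt(variance + eps) for x in vector]

tokens = [[1.0, 0.0], [0.0, 1.0]]   # two made-up token vectors
mixed = self_attention(tokens)
normed = layer_norm([1.0, 2.0, 3.0])
print(mixed[0], normed)
```

In this toy run, the first token ends up mostly like itself with a little of the second token mixed in, which is the "dynamic focus" the attention block provides.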

Now that we’ve taken a peek behind the curtain, it’s hard not to feel a little overwhelmed by the scale of it all. Imagine trying to follow billions of tiny dials twisting in perfect synchrony, thousands of switches firing in each layer, and then entire blocks of Self-Attention and MLP weaving their outputs into the next stage. The computer is churning through seas of matrix multiplications just to land on a single answer. For researchers hoping to trace the model’s private reasoning, that tangled web of hidden calculations can feel impenetrable.

Researchers at Anthropic found that individual neurons in a large language model don’t act like simple on-off gates for single ideas. Instead, they’re polysemantic, meaning each one can respond to many different concepts. You might see the same neuron fire for translating a French phrase and for listing popular dog breeds. That overlap makes it very hard to pin a single meaning on any one neuron. The leading explanation is what Anthropic researchers call “superposition”: the model needs to represent more concepts than it has neurons, so each neuron ends up carrying pieces of multiple ideas at once. Without a clear one-to-one link between neuron and concept, activations become very hard to interpret.
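A toy illustration of superposition, under stated assumptions: three concepts are squeezed into just two neurons by giving each concept its own direction, spaced 120 degrees apart. The concept names and the geometry are invented for this sketch and are not taken from Anthropic's experiments.

```python
import math

# Three hypothetical concepts packed into two neurons as directions in a plane.
CONCEPT_DIRECTIONS = {
    "french": (1.0, 0.0),
    "dog breeds": (-0.5, math.sqrt(3) / 2),
    "rhyme": (-0.5, -math.sqrt(3) / 2),
}

def neuron_activations(active_concepts):
    """Add up the directions of every active concept to get two neuron values."""
    n0 = sum(CONCEPT_DIRECTIONS[c][0] for c in active_concepts)
    n1 = sum(CONCEPT_DIRECTIONS[c][1] for c in active_concepts)
    return (n0, n1)

def readout(activations, concept):
    """Project the neuron values back onto one concept's direction."""
    direction = CONCEPT_DIRECTIONS[concept]
    return activations[0] * direction[0] + activations[1] * direction[1]

acts = neuron_activations(["dog breeds"])
print(acts)                          # both neurons move for a single concept
print(readout(acts, "dog breeds"))   # strong signal for the active concept
print(readout(acts, "french"))       # weaker interference on another concept
```

Neuron 0 changes its value for all three concepts, so looking at that neuron alone cannot tell you which concept is present. That is the interpretability problem in miniature.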

Anthropic researchers realized that treating raw neurons as the smallest units just doesn’t work. Instead, they focus on “features” as the true building blocks. Much like biologists use cells to understand life, “features” reveal meaningful patterns inside the model. A feature is a recurring pattern of activity spread across a group of neurons that corresponds to a single recognizable concept. By studying features rather than individual neurons, we preserve clear, human-readable meaning in the model’s core computations and make its “thoughts” far easier to trace.

But how do they find these “features” in a vast sea of neurons? Researchers built something called a “replacement model”. At its core is a translator called a “transcoder” (more specifically, a cross-layer transcoder), which takes the neuron activations of a model and translates them into these so-called features. During training, the transcoder adjusts itself to spot which neuron patterns belong together, fine-tuning its filters so that each feature captures, as far as possible, one clear concept. The result is a set of sparse, well-defined features that mitigate the superposition phenomenon and make the model’s inner workings far more interpretable.
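The translation step can be sketched with a hand-built encoder. This is only an illustration of the idea of sparse features: a real cross-layer transcoder is trained on the model's activations rather than set by hand, and the feature names, weights, and bias below are all assumptions.

```python
def relu(x):
    return max(0.0, x)

# Hypothetical encoder: one weight pair per feature, reading two neurons.
ENCODER_WEIGHTS = {
    "french": (1.0, 0.0),
    "dog breeds": (-0.5, 0.866),
    "rhyme": (-0.5, -0.866),
}
BIAS = -0.25  # assumed negative bias that silences weak, ambiguous matches

def encode(neuron_values):
    """Translate two tangled neuron values into named, mostly-zero features."""
    return {
        name: relu(neuron_values[0] * w[0] + neuron_values[1] * w[1] + BIAS)
        for name, w in ENCODER_WEIGHTS.items()
    }

features = encode((1.0, 0.0))  # neuron values produced by a "french" input
active = [name for name, value in features.items() if value > 0]
print(features, active)
```

Only one feature stays on while the others are clipped to zero. That sparsity is what makes a feature easier to label than a raw neuron.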

After pinning down features as the model’s basic units, the researchers wanted to see how those features flow and combine to produce an answer. They adapted an idea from electrical work called circuit tracing, where you follow wiring in a house to learn which breaker powers which outlet. They implemented it using attribution graphs, a type of graph that illustrates the model’s “forward pass” on an input prompt. We can think of a forward pass as the transition from one wall of switches to the next, passing through the layer of dials (our weights) in between. In an attribution graph, however, the forward pass is represented not through individual neurons but through features. With features as the nodes (our switches) and directed “edges” (the wires connecting one switch to another), the graph indicates the influence of one node’s activation on another, essentially attributing one feature’s activation to the activation of earlier features (you can find a much better visualization of the graph in Figure 1 and Figure 5 of Anthropic’s paper).
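As a rough sketch, an attribution graph can be stored as a dictionary of weighted, directed edges between features. The example borrows the Dallas prompt discussed later in this article; the node names and edge strengths are invented for illustration and are not values from Anthropic's paper.

```python
# Hypothetical attribution graph: source feature -> {target feature: strength}.
EDGES = {
    "Dallas": {"Texas": 0.9},
    "capital": {"say a capital": 0.8},
    "Texas": {"Austin": 0.7},
    "say a capital": {"Austin": 0.6},
}

def total_influence(source, target):
    """Sum, over every path from source to target, the product of edge strengths.

    Assumes the graph has no cycles, which holds for a forward pass because
    signals only flow from earlier features to later ones.
    """
    if source == target:
        return 1.0
    return sum(
        strength * total_influence(next_node, target)
        for next_node, strength in EDGES.get(source, {}).items()
    )

print(total_influence("Dallas", "Austin"))
print(total_influence("Austin", "Dallas"))
```

Reading the graph this way answers the question "how much of this output can be attributed to that earlier feature?", and the zero in the reverse direction reflects that influence only flows forward.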

This attribution graph helped researchers map Claude’s internal chain of reasoning, showing how Claude processes inputs and which features it relies on to produce accurate outputs. To test whether certain features actually have an impact on others, they performed intervention experiments, similar in spirit to those used in neuroscience. These experiments consist of activating or suppressing certain features to see how the final output changes, which ultimately helps researchers assess how much impact a feature truly has on the output of the model. One example Anthropic uses is poetry: interrupting Claude’s planning with other proposed words to see how it affects the output (you can see a visualization of this here).
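An intervention can be sketched on a toy two-step "model". Everything here (the function, the feature names, and the numbers) is a hypothetical illustration of the clamp-and-compare idea, not Anthropic's tooling.

```python
def forward(dallas_activation, clamp=None):
    """Tiny stand-in for a forward pass, with an optional intervention.

    `clamp` maps a feature name to a forced activation, overriding whatever
    the toy model computed for that feature.
    """
    clamp = clamp or {}
    features = {"Texas": 0.9 * dallas_activation, "California": 0.0}
    features.update(clamp)  # the intervention: overwrite chosen features
    scores = {
        "Austin": 0.7 * features["Texas"],
        "Sacramento": 0.7 * features["California"],
    }
    return max(scores, key=scores.get)

baseline = forward(1.0)
intervened = forward(1.0, clamp={"Texas": 0.0, "California": 1.0})
print(baseline, intervened)
```

If forcing a feature off (or swapping it for another) changes the answer, that is evidence the feature was doing real causal work rather than just lighting up alongside the output.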

With all of this foundation laid out, and the tools to accurately map what occurs when Claude reasons, we can begin to explore some of the key findings made by Anthropic’s researchers.

The Fun Part: Why Claude Might Have Abstraction Abilities

Using the tools developed in the previous section, Anthropic researchers published a follow-up paper titled On the Biology of a Large Language Model, where they explore some of their findings after studying Claude’s thinking process. From these findings, they discovered that the notion of Large Language Models as “next-token generation” machines is incomplete. Although the training goal is still next-token prediction, Claude often forms intermediate representations that let it plan ahead and break problems into conceptual steps instead of relying only on surface-level token probabilities.

For starters, Claude breaks down problems internally and implements “shortcut” reasoning, where it links features to other features to arrive at an accurate response to a prompt. The example that Anthropic uses is the prompt “What is the capital of the state containing Dallas?”. Claude breaks the problem into two steps: first, it activates the “Texas” feature after working out which state Dallas is located in; then it activates the “Austin” feature once it determines the capital of Texas, outputting “Austin” (Figure 6). Returning to the attribution graph, Austin can be attributed to Texas here, since the Austin feature was activated by the Texas feature. This shows that Claude does not just recall a direct association between Dallas and Austin or memorize a single fact. Rather, it interprets the problem in an abstract space, breaking it into multiple parts and then connecting them.

Next, and quite remarkably, they found that Claude can plan ahead. When prompted to write poetry, Claude first settled on a target word that rhymed with the one supplied by the researchers, then built the sentence around that word, rather than generating words one by one and hoping to end on a rhyme. In other words, Claude “thinks” not only about what it has already generated but also about what it intends to generate. The researchers then tested this with an intervention experiment: they replaced the planned word with another, and Claude adapted to the change, building the rest of the sentence to fit the inserted word.

Also, Claude seems to be multilingual at the level of concepts, not just words. When asked for “the opposite of small” in English, Chinese, and French, the model appeared to use the same features for the abstract concepts of “smallness”, “opposites”, and “largeness”, then produced an accurate response. Essentially, Claude assigns semantic attributes to words across languages, implying the existence of an internal conceptual space where words have meaning. This abstract space holds features that it can apply to various languages, instead of keeping a separate “meaning” space for each language.

Crucially, especially for anyone using Claude in daily workflows, researchers at Anthropic were able to document one cause of hallucinations (the generation of facts that are untrue) by analyzing the circuits that are active when hallucinations occur. The researchers found a circuit that helps Claude decide whether or not it should answer, depending on how familiar the prompt is. A set of features described as “I can’t answer” is on by default (Figure 33). In effect, Claude starts out skeptical and declines to answer unless features signaling a familiar entity or a known answer switch that default off. Hallucinations occur when this circuit misfires: the model mistakenly treats something as familiar, the default refusal is suppressed, and it generates a false output.
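The default-refusal idea can be caricatured in a few lines. This is a toy under loud assumptions: the function name, the inhibition strength, and the 0.5 cutoff are all invented, and the real circuit involves many interacting features rather than a single number.

```python
def should_answer(familiarity, default_refusal=1.0, inhibition=1.5):
    """Toy refusal circuit.

    A "can't answer" signal starts fully on and is pushed down by how
    familiar the prompt's subject feels (0.0 = unknown, 1.0 = well known).
    The model answers once that signal drops below an assumed cutoff of 0.5.
    """
    cant_answer = max(0.0, default_refusal - inhibition * familiarity)
    return cant_answer < 0.5

print(should_answer(1.0))   # well-known subject: answers
print(should_answer(0.0))   # unknown subject: declines
print(should_answer(0.4))   # vaguely familiar name: answers anyway
```

The last case is the misfire: a name that merely feels familiar is enough to quiet the refusal signal, so the toy model answers even though it has no real knowledge to draw on.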

Ultimately, it seems plausible that Claude has a lot more going on behind the scenes than simple probabilistic next-token output. Researchers seem to have found more semantic, abstract, and almost philosophical qualities in Claude that permit it to break down and interpret problems effectively and to find “mental” shortcuts similar to the ones found in the human brain. These findings point to a promising future for the autonomous, self-learning abilities of Large Language Models, where relatively minor human input is necessary for optimization.

Philosophically-Adept Artificial Intelligence?

Anthropic’s circuit-tracing results suggest that a large language model is more than an engine of utility; in order to predict the next token it must first locate that token inside an internal “conceptual manifold.” The model builds the very conceptual categories it later reasons within, organizing raw data into stable abstractions to optimize its thinking. The space of meanings that emerges is therefore not an add-on but a structural requirement of effective inference.

Studying this engineered space gives us a rare empirical foothold on questions long left to speculation. If qualitative distinctions such as “smallness” and “opposites” arise spontaneously in Claude from an effort toward error reduction, then abstract, intangible human experiences may likewise be a natural product of neural information processing rather than evidence of some irreducible mental substance. Though the broader questions remain open, Anthropic’s methods unlock a powerful pathway for systematically mapping and understanding how language models think.

Written by Alejandro Alcocer
